In the fast-evolving world of AI development, staying ahead means constant innovation and strategic upgrades. Recently, amidst the buzz created by major releases from OpenAI and Google, Anthropic quietly introduced a noteworthy update to their AI lineup: Claude Opus 4.1. While not a revolutionary leap, this upgrade offers meaningful improvements, especially for developers and teams working with complex coding tasks and autonomous agents.

Alongside Claude Opus 4.1, Anthropic also rolled out significant enhancements to Claude Code, their AI-powered coding assistant. This new upgrade introduces automated security reviews that can detect and fix vulnerabilities like SQL injections, cross-site scripting (XSS), and insecure data handling directly from your terminal or GitHub pull requests. Together, these updates mark a strong step forward in AI-assisted software engineering.

In this comprehensive article, we’ll dive deep into what Claude Opus 4.1 brings to the table, how it performs on key benchmarks, its pricing considerations, and the exciting new features of Claude Code. Whether you’re a developer, an engineering team lead, or an AI enthusiast, this breakdown will help you understand why Anthropic’s latest offerings deserve your attention — and when you might want to consider alternatives.

Table of Contents

- Claude Opus 4.1: A Tactical Upgrade to Stay Competitive

- Performance Benchmarks: Where Claude Opus 4.1 Stands

- Pricing: Powerful but Expensive

- Real-World Demos: Showcasing Claude Opus 4.1’s Capabilities

- Getting Started with Claude Opus 4.1

- Claude Code Upgrade: Revolutionizing AI-Powered Code Security

- Final Thoughts: Is Claude Opus 4.1 Worth It?

- Frequently Asked Questions (FAQ)

- Stay Updated and Get Involved

Claude Opus 4.1: A Tactical Upgrade to Stay Competitive

Let’s be real: Claude Opus 4.1 isn’t a groundbreaking release that flips the AI landscape on its head. Instead, it feels like a tactical move by Anthropic to maintain relevance amid the flurry of announcements from OpenAI and Google. But don’t let that undersell its value. Claude Opus 4.1 is a drop-in replacement for Opus 4, with several meaningful improvements to hybrid reasoning, agentic workflows, and real-world coding applications.

One of the standout features is its 200,000-token context window. A window this large lets the model handle sizable inputs and maintain context over longer interactions, which is crucial for complex coding projects or autonomous agents that require multi-step reasoning.

While the upgrade may not have blown anyone away in terms of headline-grabbing new capabilities, it does deliver on quality. On SWE-bench Verified, a benchmark designed to evaluate AI models on real-world software engineering tasks, Claude Opus 4.1 scored roughly 2 percentage points higher than its predecessor. That may sound small, but for an AI coding assistant it can mean fewer bugs, more precise outputs, and better multi-step reasoning.

In other words, Claude Opus 4.1 represents a clear refinement of Anthropic’s capabilities, particularly benefiting developers managing large codebases or teams building autonomous agents. It’s about polishing the model’s strengths to make it more reliable and effective in real-world scenarios.

Anthropic has also teased that this release might just be the beginning. They’ve announced plans for substantially larger improvements across all their models in the coming weeks, so we can expect even more powerful upgrades soon.

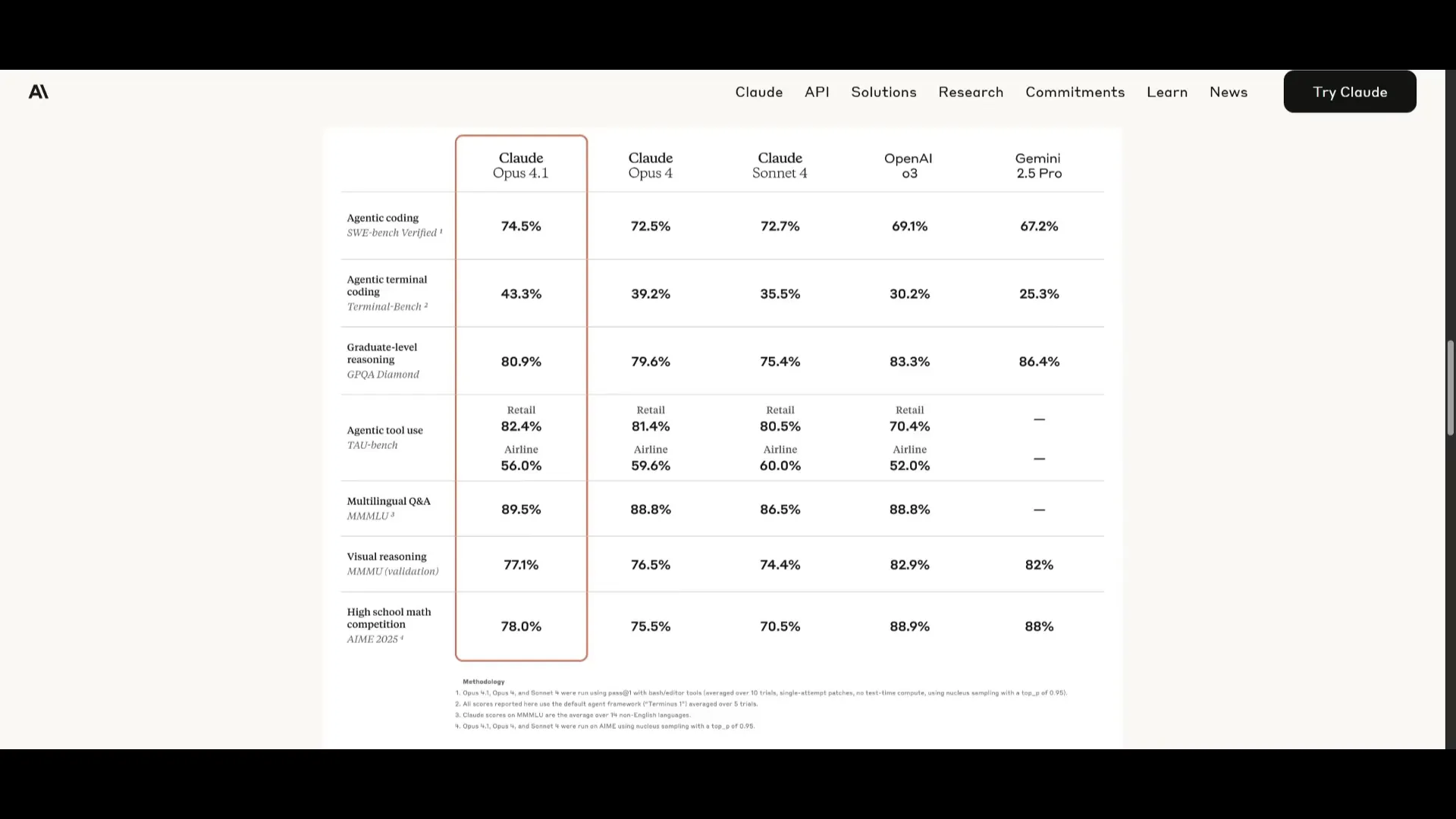

Performance Benchmarks: Where Claude Opus 4.1 Stands

When evaluating AI models for coding tasks, benchmarks offer a valuable window into their strengths and weaknesses. Claude Opus 4.1 shines particularly in coding-centric benchmarks such as agentic coding and agentic terminal coding, as well as in graduate-level reasoning tests.

These benchmarks collectively demonstrate that Claude Opus 4.1 is a reputable and capable model in the coding domain. While it does not surpass the reasoning abilities of OpenAI’s latest models or Google’s Gemini 2.5 Pro, it holds its own — especially when it comes to coding tasks.

In terms of tool use and agentic workflows, Claude Opus 4.1 performs admirably, making it an excellent choice for developers who want AI models that can assist with interactive coding tasks and complex workflows.

However, when you step outside pure coding into areas like multilingual understanding, visual reasoning, or advanced mathematics, Claude Opus 4.1 delivers decent but not top-tier results. Models like OpenAI’s o3 and Gemini 2.5 Pro still hold an edge in these broader capabilities.

How Does Claude Opus 4.1 Compare to Open Source Alternatives?

One important consideration is the rise of open source models such as Kimi K2 and GLM 4.5. These models are increasingly capable of matching the coding performance of proprietary models like Claude Opus 4.1, especially for front-end development and general codebase work.

For developers or teams mindful of budget constraints, these open source options might offer a more cost-effective alternative without sacrificing much in coding quality. That said, Claude Opus 4.1’s strength in agentic workflows and tool integration still makes it a compelling choice for certain use cases.

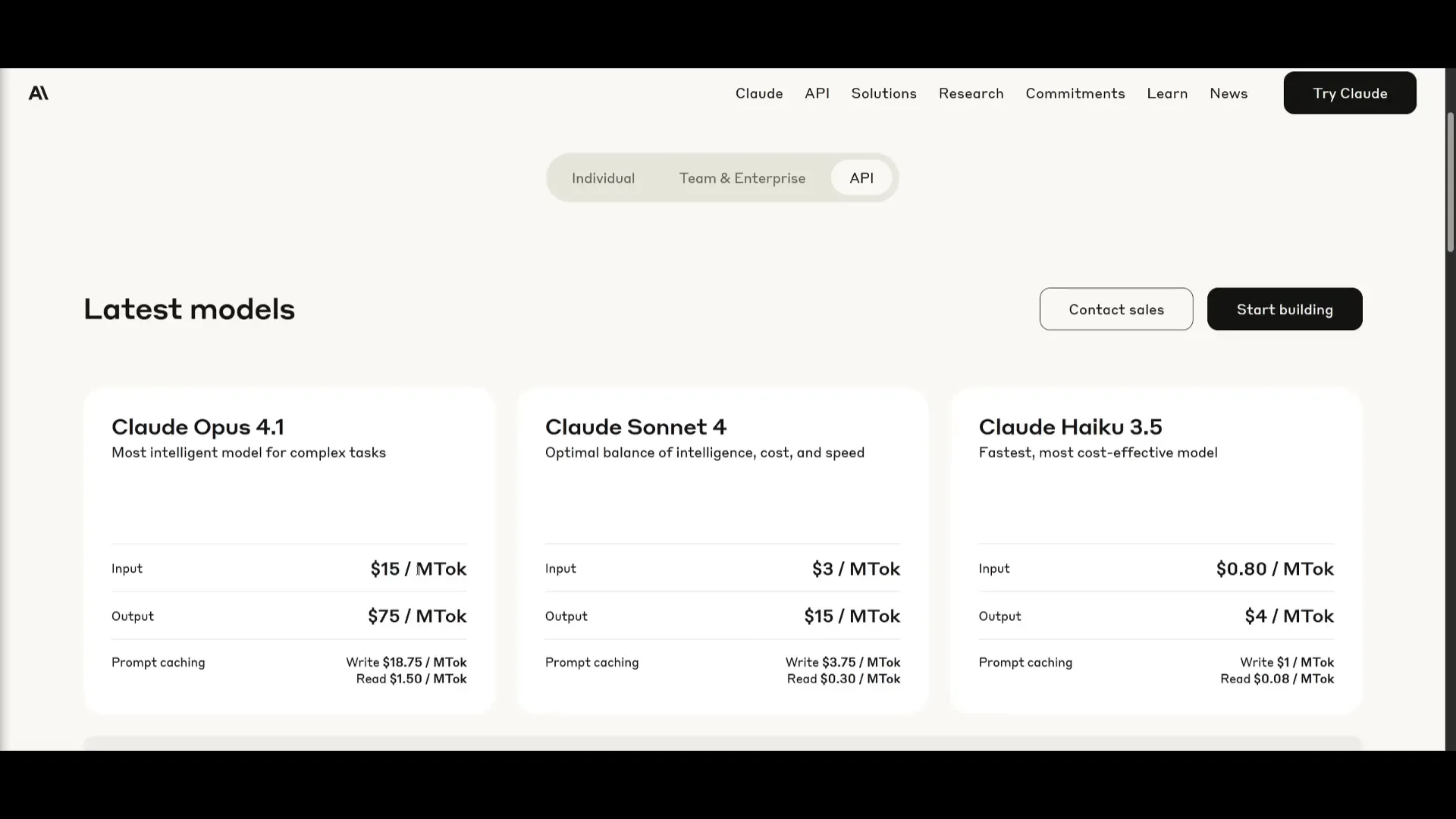

Pricing: Powerful but Expensive

One of the biggest challenges with Claude Opus 4.1 is its pricing. Anthropic has maintained the same cost structure as the previous Opus 4.0 model, which is on the higher end:

- $15 per 1 million input tokens

- $75 per 1 million output tokens

For many developers, especially those working on smaller projects or prototypes, this pricing might be prohibitive. However, for teams managing large codebases or requiring top-tier performance in critical applications, the cost may be justified.

A practical approach could be toggling between Claude Opus 4.1 and more affordable models like Claude Sonnet 4 within Anthropic’s Claude Code environment. This flexibility allows developers to optimize for cost and performance based on the task at hand.
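If you call the models from your own scripts rather than from the terminal, the same idea can be sketched as a tiny routing function. This is only an illustration: the task categories are hypothetical, and the model identifiers are assumptions that should be checked against the provider's current model list.

```python
# Model identifiers are assumptions for illustration; verify the exact
# names against the provider's model list before using them.
OPUS_MODEL = "claude-opus-4-1-20250805"
SONNET_MODEL = "claude-sonnet-4-20250514"

# Hypothetical task categories where the more expensive model is worth it.
DEMANDING_TASKS = frozenset({"agentic_workflow", "large_refactor", "critical_backend"})


def pick_model(task_kind: str) -> str:
    """Return the pricier model only for tasks flagged as demanding."""
    return OPUS_MODEL if task_kind in DEMANDING_TASKS else SONNET_MODEL
```

With this sketch, `pick_model("large_refactor")` selects the Opus identifier, while anything not listed as demanding falls back to the cheaper model.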

Real-World Demos: Showcasing Claude Opus 4.1’s Capabilities

To truly appreciate the power of Claude Opus 4.1, let’s look at some real-world demos that highlight its coding prowess and multi-step reasoning.

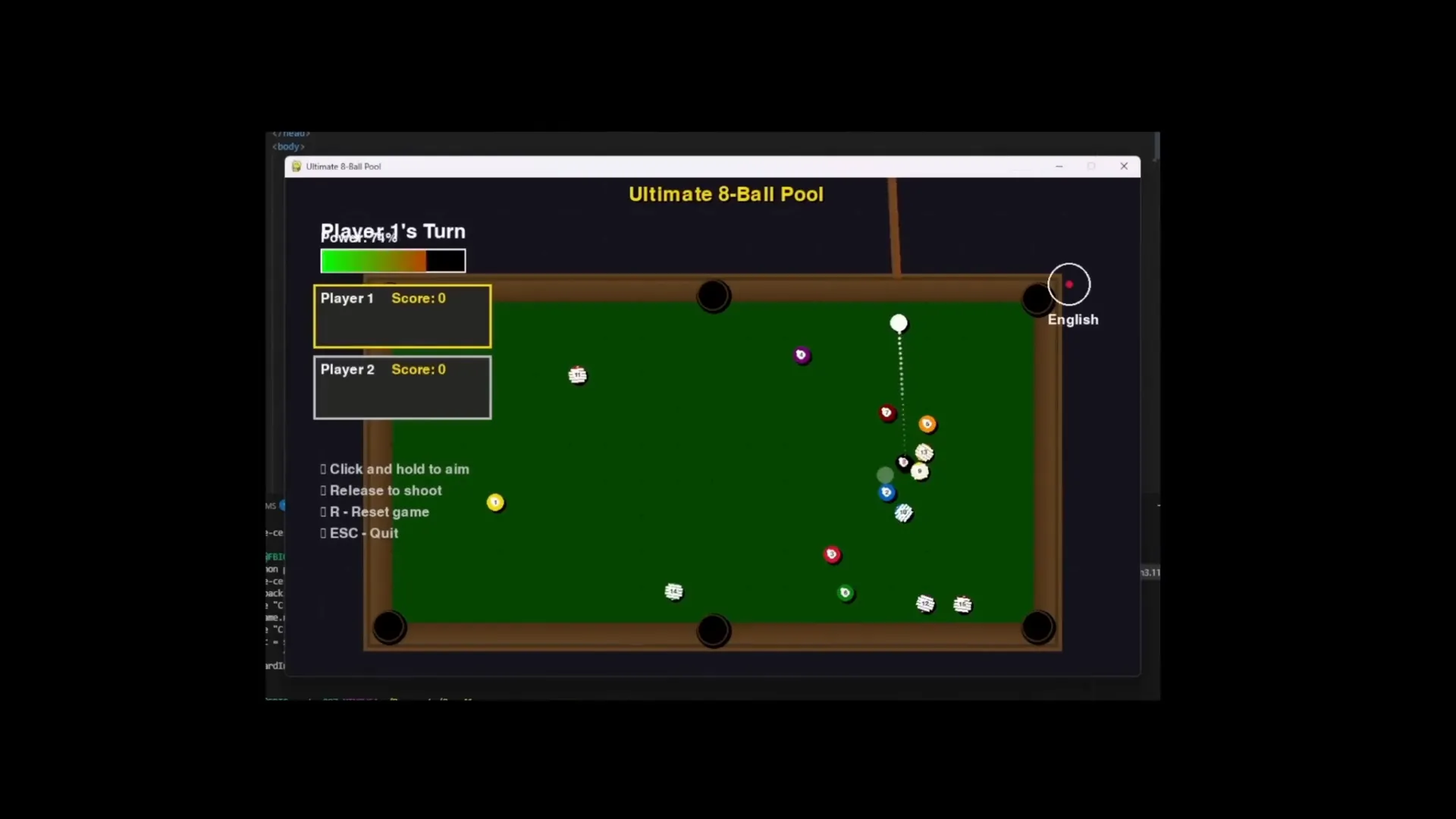

Building a Pool Game

In one impressive demonstration, Claude Opus 4.1 was tasked with creating a playable pool game. While the first iteration wasn’t perfect, multiple refinements led to a solid, functional version. This highlighted the model’s ability to engage in multi-step reasoning and agentic workflows, iteratively improving its output based on feedback.

Creating a Web OS Desktop App

Perhaps the most eye-opening demo involved instructing the model to build a functional web OS desktop application. Claude Opus 4.1 managed to replicate a desktop operating system interface complete with a working file manager, UI prototyping, and key OS components.

This feat, however, came at a considerable cost — roughly 2.7 million tokens input and 117,000 tokens output, totaling about $48.88. While the performance was top-tier, the expense clearly positions this kind of project beyond everyday use for many developers.
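To see where a bill like that comes from, here is a minimal sketch that applies the per-token rates quoted in the pricing section to the rounded token counts from the demo. The function name is made up for illustration. With the rounded counts the estimate lands at roughly $49.3, slightly above the reported $48.88, which is consistent with the token counts above being rounded.

```python
# Rates quoted in this article, in US dollars per 1 million tokens.
INPUT_RATE_PER_MTOK = 15.00
OUTPUT_RATE_PER_MTOK = 75.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in US dollars for a given token usage."""
    input_cost = input_tokens / 1_000_000 * INPUT_RATE_PER_MTOK
    output_cost = output_tokens / 1_000_000 * OUTPUT_RATE_PER_MTOK
    return input_cost + output_cost


if __name__ == "__main__":
    # Rounded figures from the web OS demo: ~2.7M input, ~117k output tokens.
    cost = estimate_cost(2_700_000, 117_000)
    print(f"Estimated cost: ${cost:.2f}")
```

Note that the input side dominates here: about $40.50 of the total comes from input tokens, which is typical for long agentic sessions that repeatedly resend context.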

For many, using more affordable models like Kimi K2 or GLM 4.5 for front-end tasks and reserving Claude Opus 4.1 for backend or critical code generation might strike the best balance between cost and capability.

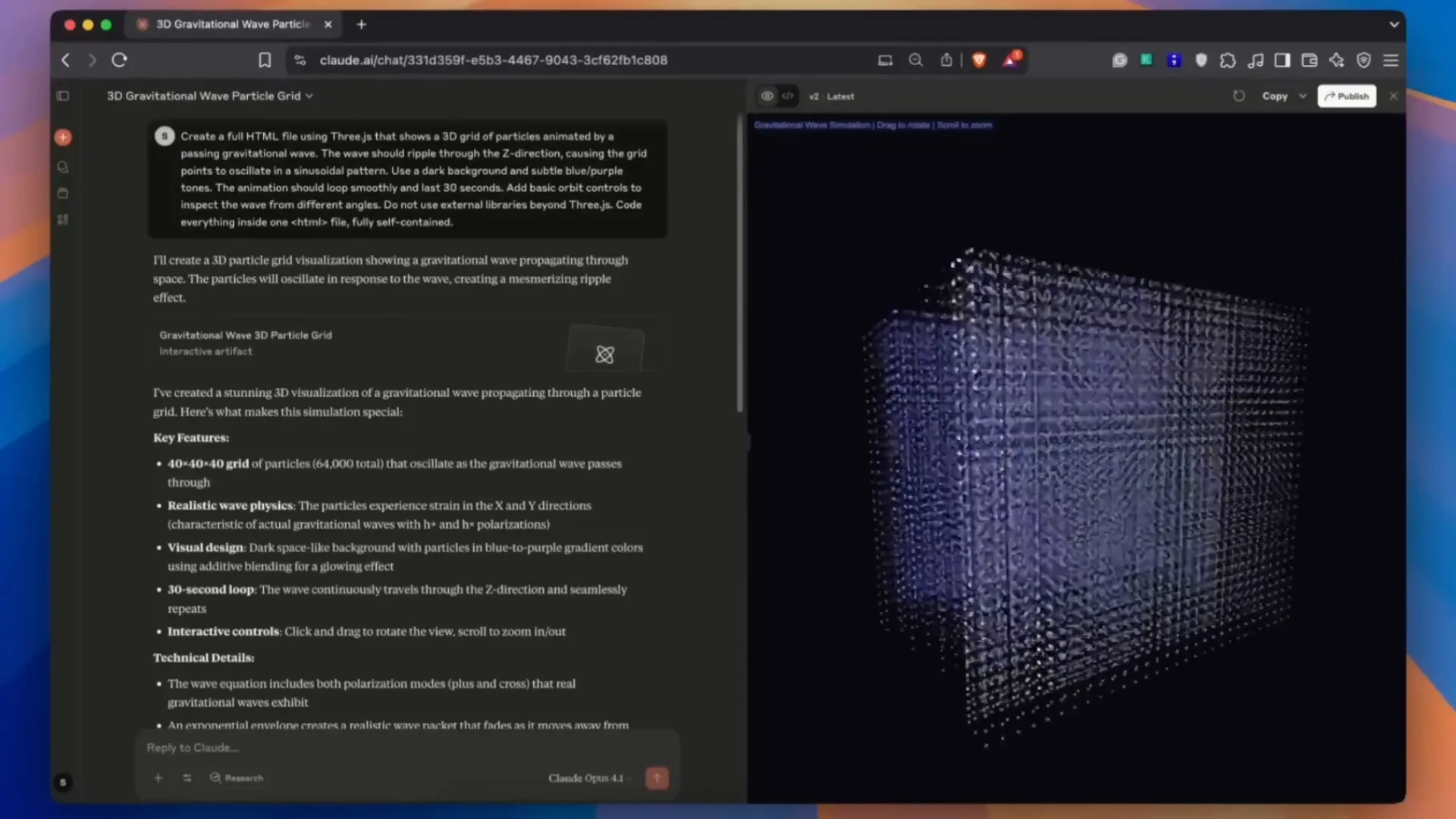

Generating Complex 3D Visualizations with Three.js

Another fascinating example showcased Claude Opus 4.1’s ability to generate a full HTML file featuring a 3D grid of particles animated by passing gravitational waves using Three.js. In this demo, the quality and sophistication of the output surpassed what you’d typically get from other models like OpenAI’s o3 or Gemini 2.5 Pro.

This demonstrates why Claude Opus 4.1 is considered ideal for agentic coding and tool use, especially when integrated within Anthropic’s Claude Code environment.

Getting Started with Claude Opus 4.1

If you’re interested in trying out Claude Opus 4.1, there are several ways to get started:

- Anthropic API: Create an API key in the Anthropic Console to integrate Claude Opus 4.1 into your projects.

- Claude Subscription: Use Claude Opus 4.1 directly within Anthropic’s chatbot if you have a Claude subscription.

- Third-Party Providers: Access the model through platforms like OpenRouter and other service providers.

These options make it flexible for developers to experiment with the model and incorporate it into their workflows.
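For the API route, a request can be sketched with nothing but the Python standard library. Treat this as a rough outline rather than official usage: the model identifier is an assumption to verify against Anthropic's model list, the helper names are made up for illustration, and the key is expected in an `ANTHROPIC_API_KEY` environment variable.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
# Assumed model identifier; check Anthropic's model list for the exact name.
MODEL_ID = "claude-opus-4-1-20250805"


def build_request(prompt: str, api_key: str, max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a single-turn Messages API request."""
    payload = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the text of the reply (needs network access)."""
    request = build_request(prompt, os.environ["ANTHROPIC_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # The reply is a list of content blocks; join the text ones.
    return "".join(block["text"] for block in body["content"] if block["type"] == "text")
```

Calling `ask("Explain this stack trace: ...")` with the environment variable set would send the request. In a real project the official SDK is usually the more convenient choice; the raw request is shown here only to make the moving parts visible.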

Claude Code Upgrade: Revolutionizing AI-Powered Code Security

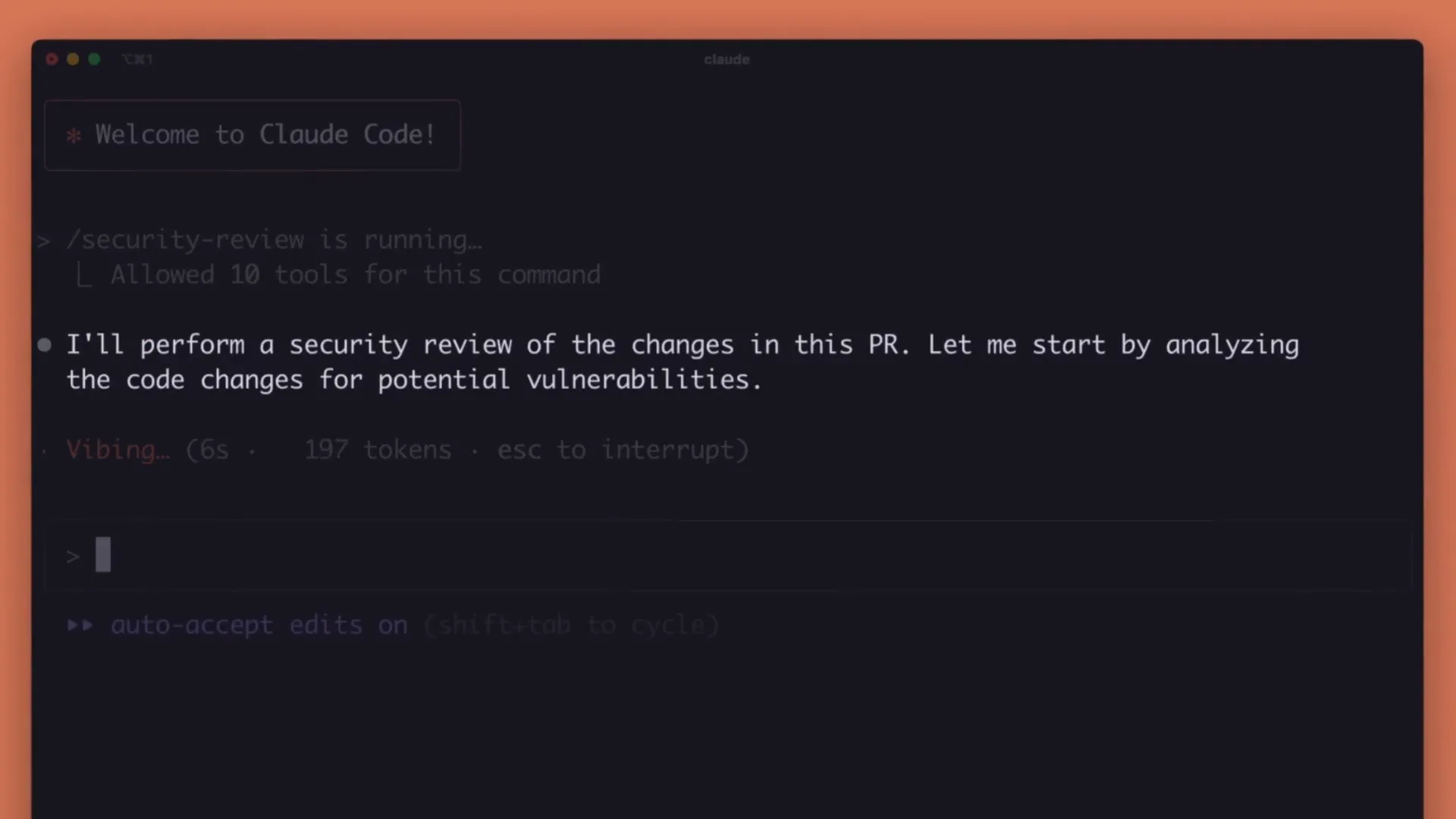

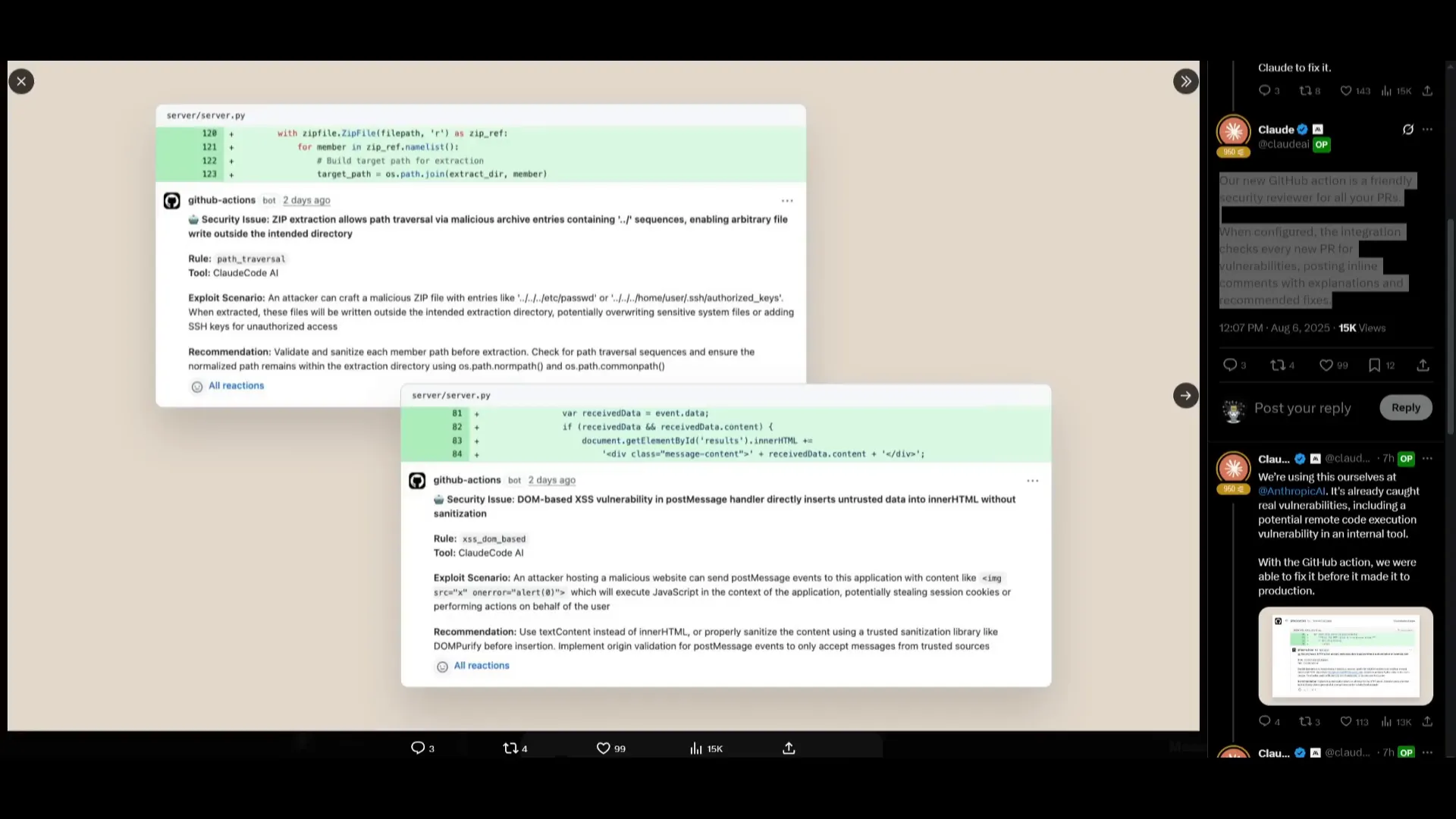

Alongside Claude Opus 4.1, Anthropic unveiled an exciting upgrade to Claude Code, their AI coding assistant. This update focuses on automated security reviews, a game changer for development teams prioritizing secure software delivery.

Here’s what the new Claude Code upgrade brings:

- On-Demand Security Reviews: A new slash command, /security-review, allows developers to request security assessments directly from their terminal.

- GitHub Actions Integration: Claude Code can automatically run security checks on every pull request, scanning for vulnerabilities before code is merged or deployed.

- Vulnerability Detection: Claude now identifies a range of security issues including SQL injection risks, cross-site scripting (XSS), and insecure data handling.

- Automated Fixes: If vulnerabilities are found, Claude can directly fix and secure the code within Claude Code, accelerating secure development cycles.
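To make the first vulnerability class concrete, here is a small, self-contained illustration of a SQL injection and its usual fix. It is not output from Claude Code; the table, function names, and payload are invented for the example, and it simply shows the kind of pattern a security review is meant to flag.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with two example users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("root", 1)])
    return conn


def find_user_vulnerable(conn: sqlite3.Connection, name: str) -> list[str]:
    # Vulnerable: user input is spliced directly into the SQL text,
    # so a crafted name can change the meaning of the query.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[str]:
    # Fixed: a placeholder keeps the input as data, never as SQL.
    query = "SELECT name FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print("vulnerable:", find_user_vulnerable(conn, payload))
    print("safe:", find_user_safe(conn, payload))
```

With the crafted payload, the vulnerable version returns every user in the table, while the parameterized version returns nothing because no user has that literal name.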

This upgrade effectively turns Claude into an AI engineer with an integrated security team, saving developers valuable time and reducing the risk of shipping vulnerable code.

The GitHub security reviewer integration is particularly powerful. It reviews every pull request, posts inline comments highlighting vulnerabilities, and can even suggest or apply fixes automatically. This kind of built-in security review is highly valuable for teams adopting continuous integration and deployment (CI/CD) workflows.

Final Thoughts: Is Claude Opus 4.1 Worth It?

Overall, Claude Opus 4.1 and the upgraded Claude Code represent solid steps forward for Anthropic in the AI coding space. Although the model comes with a high price tag, its improvements in multi-step reasoning, agentic workflows, and real-world coding make it a powerful tool for developers working on complex projects or in enterprise environments.

There have been some recent hiccups, such as the introduction of rate limits on Claude Sonnet 4, but these new upgrades and planned future improvements make Anthropic’s offerings increasingly attractive compared to other providers.

For cost-conscious developers, exploring open source alternatives like Kimi K2 or GLM 4.5 is advisable, especially for front-end or less critical tasks. However, for those needing top-tier coding assistance with integrated security features, Claude Opus 4.1 combined with the upgraded Claude Code is a compelling choice.

As we look ahead, Anthropic’s commitment to refining their models and adding innovative features bodes well for the future of AI-assisted software engineering.

Frequently Asked Questions (FAQ)

What is Claude Opus 4.1?

Claude Opus 4.1 is the latest upgrade to Anthropic’s AI language model series, focused on improved hybrid reasoning, agentic workflows, and real-world coding capabilities. It features a large 200k token context window and delivers higher precision and fewer bugs compared to its predecessor.

How does Claude Opus 4.1 perform compared to other AI models?

It performs very well on coding benchmarks, especially for complex software engineering tasks. While it doesn’t surpass OpenAI’s latest reasoning models or Google’s Gemini 2.5 Pro in general reasoning, it is at its strongest in coding and tool-use tasks.

Is Claude Opus 4.1 expensive to use?

Yes, the pricing remains high at $15 per million input tokens and $75 per million output tokens, which may be costly for regular developers or smaller projects.

What new features come with the Claude Code upgrade?

The Claude Code upgrade introduces automated security reviews accessible via terminal commands and GitHub Actions integration. It detects vulnerabilities like SQL injections and XSS and can automatically fix insecure code.

Can I use Claude Opus 4.1 through an API?

Yes, you can access Claude Opus 4.1 through Anthropic’s API by creating an API key, or via a Claude subscription. It’s also accessible through third-party providers such as OpenRouter.

Are there cheaper alternatives to Claude Opus 4.1?

Yes, open source models like Kimi K2 and GLM 4.5 offer comparable coding performance at a lower cost, especially for front-end development.

What kind of projects is Claude Opus 4.1 best suited for?

It is ideal for developers and teams working on large codebases, building autonomous agents, or requiring AI models to assist with complex, multi-step coding and tool use workflows.

What improvements can we expect from Anthropic in the near future?

Anthropic has announced plans for substantial model improvements across their lineup in the coming weeks, signaling ongoing enhancements to performance and capabilities.

Stay Updated and Get Involved

If you want to keep up with the latest in AI development and get hands-on insights into models like Claude Opus 4.1, consider subscribing to AI newsletters, joining developer communities, and exploring API access. Staying informed is key as the AI landscape evolves rapidly.

Anthropic’s continuous progress with Claude Opus 4.1 and Claude Code shows exciting potential for AI-assisted coding workflows — blending power, precision, and security in ways that can transform how software is built and maintained.